Helping AI engineers evaluate generative models up to 180x faster

As AI becomes embedded in everyday tools and decisions, ensuring the safety and reliability of large language models (LLMs) is more critical than ever. Trismik, a University of Cambridge spinout, is pioneering a new standard in LLM evaluation, making AI testing faster, smarter and more trustworthy.

LLMs power a growing number of products, but testing their safety, reliability and performance has become a critical bottleneck. Current evaluation methods simply don’t scale.

Traditional LLM testing is slow, manual and inconsistent, making it difficult for teams to iterate quickly or trust their results.

Trismik solves this by offering an adaptive evaluation platform that rigorously tests models for real-world risks like hallucinations, bias and factual inaccuracy – at a fraction of the time and cost.

“AI is no longer just generating answers, it's shaping decisions, products and lives. If we want trustworthy AI, we need to treat evaluation as seriously as we take training.”

Professor Nigel Collier continues:

“Trismik aims to lead that charge by giving AI engineers the tools to test with precision, act with confidence and build with integrity.”

A faster, smarter way to evaluate AI models

Trismik’s technology uses adaptive testing and automatic scoring to evaluate models on dimensions like factuality, bias and toxicity. Inspired by psychometrics and machine learning, the system dynamically selects the most informative test cases, dramatically reducing the number of datapoints required while achieving high reliability and enabling faster development cycles.
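The article does not describe Trismik's algorithm, so the following is only a minimal sketch of the general idea behind adaptive testing as used in psychometrics. It assumes a Rasch (one-parameter item response theory) model, in which each test item has a known difficulty and the model under test has an unknown ability. At each step the sketch asks the item that is most informative at the current ability estimate, then re-estimates the ability. All names here (`adaptive_evaluate`, `answer_item`, the simulated item bank and the toy model) are hypothetical and chosen for illustration.

```python
import math


def p_correct(ability, difficulty):
    """Rasch model: probability of a correct answer on one item."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def item_information(ability, difficulty):
    """Fisher information of an item at a given ability (Rasch model)."""
    p = p_correct(ability, difficulty)
    return p * (1.0 - p)


def estimate_ability(responses, grid):
    """Maximum-likelihood ability over a fixed grid of candidate values.

    `responses` is a list of (difficulty, was_correct) pairs.
    """
    def log_likelihood(theta):
        total = 0.0
        for difficulty, was_correct in responses:
            p = p_correct(theta, difficulty)
            total += math.log(p if was_correct else 1.0 - p)
        return total

    return max(grid, key=log_likelihood)


def adaptive_evaluate(answer_item, difficulties, budget):
    """Ask at most `budget` items, always picking the most informative one.

    `answer_item(i)` runs the model on item i and returns True if correct.
    Returns the final ability estimate and the number of items asked.
    """
    grid = [x / 10.0 for x in range(-40, 41)]  # candidate abilities in [-4, 4]
    ability = 0.0                               # neutral starting estimate
    remaining = list(range(len(difficulties)))
    responses = []
    for _ in range(min(budget, len(difficulties))):
        best = max(remaining,
                   key=lambda i: item_information(ability, difficulties[i]))
        remaining.remove(best)
        responses.append((difficulties[best], answer_item(best)))
        ability = estimate_ability(responses, grid)
    return ability, len(responses)


# Hypothetical item bank: 601 items with difficulties from -3.00 to 3.00.
difficulties = [i / 100.0 - 3.0 for i in range(601)]


# Toy stand-in for a model: it answers correctly only when the item's
# difficulty is below 1.0 (a deterministic assumption for this demo).
def toy_model(i):
    return difficulties[i] < 1.0


ability, asked = adaptive_evaluate(toy_model, difficulties, budget=40)
print(f"asked {asked} of {len(difficulties)} items, ability estimate {ability:.1f}")
```

In this toy setup only 40 of the 601 items are asked, because items far from the current estimate carry little information and are skipped. A production system would also need calibrated item difficulties and a stopping rule based on the uncertainty of the estimate, neither of which is modelled here.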

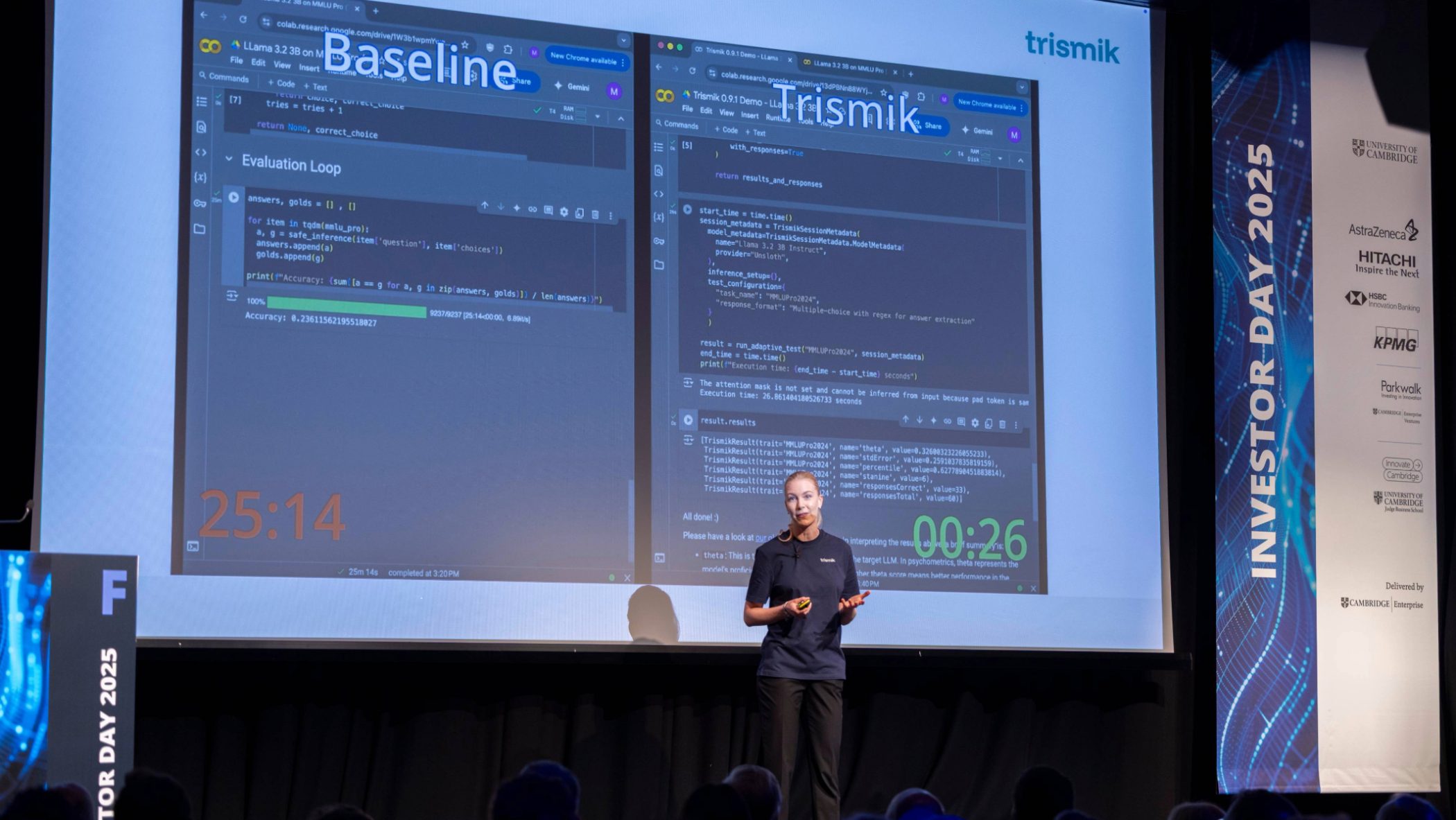

For example, the time Trismik needed to evaluate Llama 3.2B on MMLU Pro dropped from over 25 minutes to just 26 seconds – a 98% reduction. On larger models the time saving would be far greater.

Rebekka Mikkola, Co-founder and CEO of Trismik, pitching at the 2025 Founders at the University of Cambridge Investor Day

From Cambridge research to commercial impact

The idea originated in Professor Nigel Collier’s lab based in the Department of Theoretical and Applied Linguistics, where work on AI language understanding and safety exposed the limitations of current evaluation methods. The team saw an opportunity to radically improve how large language models are tested – by applying psychometric principles and adaptive systems to make evaluation faster and more scalable.

With early support from Cambridge Enterprise in 2022, the idea moved quickly from lab to venture. The team joined the Ideas Incubator in 2023, ran a student-led software bootcamp to explore prototypes and began early consultancy collaborations with Toshiba. In 2024, the team secured a £97K Technology Investment Fund (TIF) award, alongside support for developing its first software MVP.

Trismik, co-founded by CEO Rebekka Mikkola and Chief Scientist Professor Nigel Collier, joined the Founders at the University of Cambridge START 2.0 accelerator programme in early 2025, and by May had signed a licence agreement with Cambridge Enterprise. That same month, Marco Basaldella joined as CTO and third co-founder, bringing experience from Amazon, where he worked on foundation models and evaluation. Marco previously worked with Nigel as a postdoctoral researcher at the University of Cambridge, adding deep technical continuity to the founding team.

Since spinning out, Trismik has raised pre-seed funding, onboarded early enterprise partners and launched its first platform version – already delivering results up to 180x faster than classical testing.

The company continues to work closely with The Language Technology Lab at Cambridge and partners across media, telecom and tech to validate its platform in high-stakes environments. With a full product launch planned for September 2025, Trismik is expanding commercial pilots, growing its team and building a developer-friendly SDK and test library – all with the goal of setting the gold standard in adaptive LLM testing, so engineers can test with precision and act with confidence.

Image Credit: David Johnson